Better decisions create better outcomes — but speed alone doesn’t make them better. The future of decision-making lies in systems that combine dynamic context, structured frameworks, and real collaboration. AI already has the intelligence; what’s missing is the structure to make it truly useful for the work that matters most.

Tobias Lütke recently put words to something many of us have felt building with LLMs:

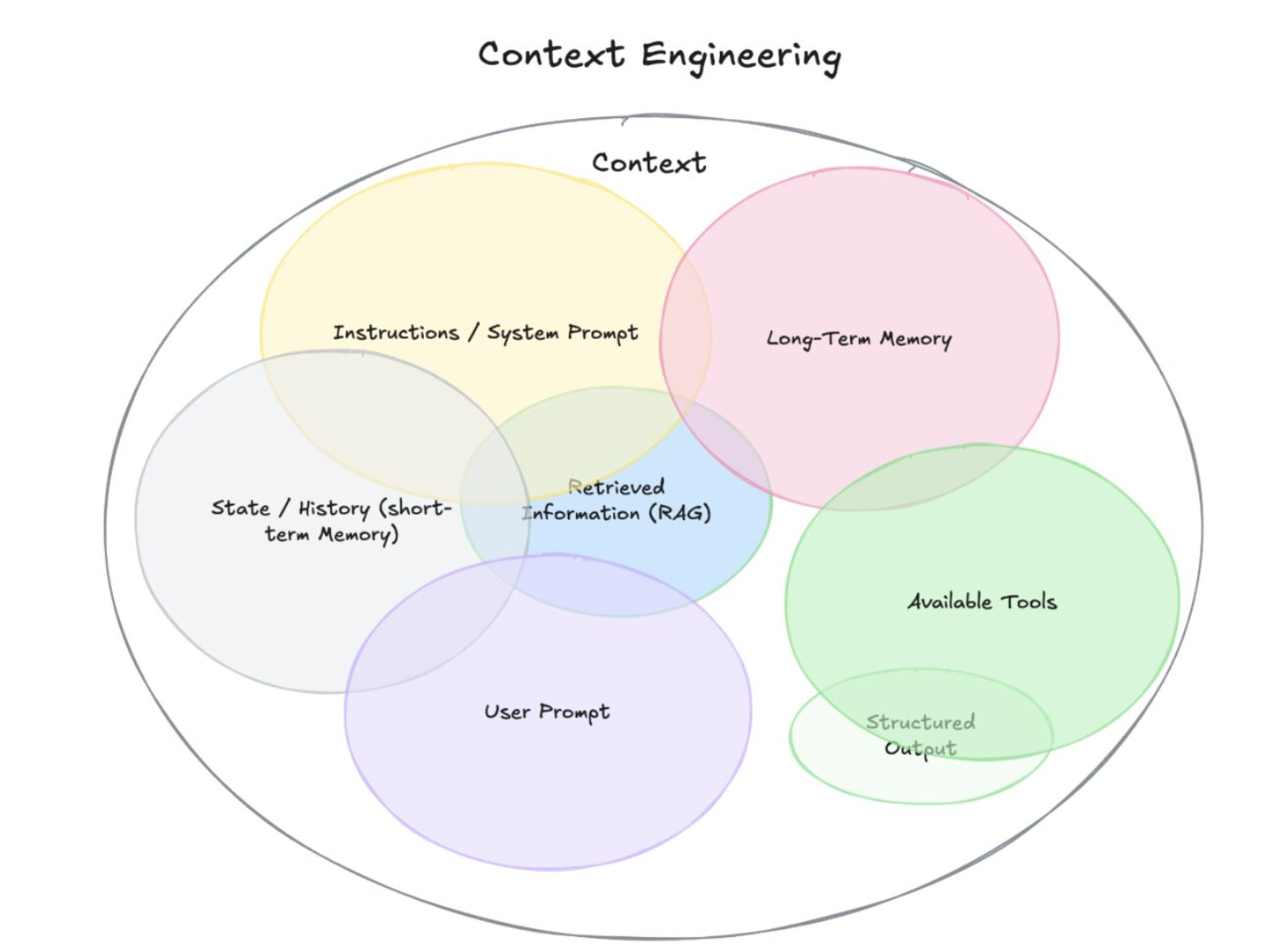

“I really like the term ‘context engineering’ over prompt engineering. It describes the core skill better: the art of providing all the context for the task to be plausibly solvable by the LLM.”

This shift is key.

To me, prompt engineering helps you get the most out of what the model already knows, while context engineering expands the scope of the conversation by bringing in your data, tools, history, and goals. That expansion unlocks new capabilities and real value, but it also demands more deliberate design of the environment around the model.

The real power comes from shaping the full context:

- What the model remembers
- What tools and data it can access
- What prior work it sees
- How outputs are structured
- And how all of that evolves over time

Philipp Schmid laid this out in one of the clearest ways I’ve seen, showing how components such as retrieval-augmented generation (RAG), memory, state, and structured outputs all belong to the same design layer: context engineering. The better your context layer, the more capable and aligned your AI becomes.
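To make the idea of a single context layer a little more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how Hubs or Philipp Schmid implement it: the `ContextLayer` class, its fields, and the plain-text prompt layout are all hypothetical. It gathers goals, memory, tools, prior work, and an output format into one prompt, and lets memory grow between requests. The `prior_work` list stands in for whatever a retrieval (RAG) step would return.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ContextLayer:
    """Hypothetical container for the pieces of context an assistant sees."""
    goals: list[str] = field(default_factory=list)       # shared team goals
    memory: list[str] = field(default_factory=list)      # what the model remembers
    tools: list[str] = field(default_factory=list)       # tools and data it can access
    prior_work: list[str] = field(default_factory=list)  # prior work / retrieved documents
    output_format: str = ""                              # how outputs are structured

    def remember(self, note: str) -> None:
        # Context evolves over time: notes added here appear in every later render().
        self.memory.append(note)

    def render(self, task: str) -> str:
        # Assemble every non-empty section into one prompt string, task last.
        sections = [
            ("Goals", self.goals),
            ("Memory", self.memory),
            ("Available tools", self.tools),
            ("Prior work", self.prior_work),
        ]
        parts = []
        for title, items in sections:
            if items:  # skip empty sections entirely
                parts.append(title + ":\n" + "\n".join(f"- {item}" for item in items))
        if self.output_format:
            parts.append("Output format:\n" + self.output_format)
        parts.append("Task:\n" + task)
        return "\n\n".join(parts)


if __name__ == "__main__":
    ctx = ContextLayer(
        goals=["Ship the Q3 roadmap"],
        tools=["search_docs"],
        output_format="JSON with keys 'decision' and 'rationale'",
    )
    ctx.remember("Team prefers async reviews")
    print(ctx.render("Draft the next step."))
```

In a real system each list would be filled from live sources (a document store, a task tracker, a tool registry) rather than hard-coded; the point of the sketch is only that all of them are assembled in one place and persist across requests.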

That’s what we’re building at Hubs.is.

A platform where AI assistants don’t start from scratch every time. They share your goals, retain memory, understand the state of your work, and grow alongside your team. Context isn’t peripheral or ephemeral; it’s continuous and central.

Scott Wiener, CEO and Founder at Hubs
